We are used to the way traditional software is tested: if a feature worked yesterday and the day before, it will most likely work today. The logic is deterministic, bugs are reproducible, and failures can be traced through logs. But with the rise of generative AI and complex machine learning systems, the old rules no longer apply.

Your Python code may be flawless, yet the model can still “break” in production because a user was impatient or asked a question with unusual punctuation.

This is where Stress-Testing AI Models enters the picture: a discipline that demands a new mindset from testers. If you once checked boundary values in input fields, you now test whether a neural network might spiral under what appears to be a simple question.

From “Functionality” to “Behavior”: A Paradigm Shift

In traditional software, a tester validates a function. In AI systems, a tester validates behavior. The difference is enormous.

AI models are not deterministic algorithms. They are statistical systems trained on vast datasets. They do not “execute code” in the usual sense; they generate probabilities. And research consistently shows that these systems can be surprisingly fragile.

Stress-Testing AI Models aims to uncover the limits of that fragility.

- How does a facial recognition system behave if you add barely visible noise to an image (so-called adversarial attacks)?

- How does a customer support chatbot respond if a user is not polite but angry, impatient, and incoherent?

Recent research from Harvard University confirms that models performing brilliantly under “ideal” test conditions can collapse when exposed to real-world chaos.

This is not theoretical. Engineers report cases where AI-generated code passed every unit test yet caused a 15% revenue drop in production because the model changed the order of critical service calls, violating implicit architectural rules. That is the new reality.


What Does “Stress-Testing AI Models” Mean in Practice?

Simply put, it is the process of evaluating a model under extreme, boundary, and unconventional conditions.

Unlike standard load testing — which measures how many requests a server can handle — Stress-Testing AI Models measures how many unusual, noisy, or adversarial inputs it takes before the model begins hallucinating, degrading, or making systematic errors.

| System Type | Traditional Test | Stress-Testing AI Models |
| --- | --- | --- |
| Computer Vision | Recognize a cat in a clear image | Recognize a cat if the image is noisy, blurred, rotated, or partially occluded |
| Chatbot (LLM) | Answer “What’s the weather in London?” | Answer when the user interrupts, makes typos, demands the impossible, or changes topics every two messages |
| ML Data Pipeline | Calculate credit scoring for typical incomes | Score data when unexpected correlations (e.g., age-gender bias) suddenly emerge |
| Code Generation | Write a sorting function | Write a function without violating company-specific architectural constraints |

Table 1. How stress-testing differs across AI system types.

The goal is not merely to find a bug. It is to uncover hidden vulnerabilities that surface only under specific combinations of circumstances.

The AI Tester’s Toolkit: Methods That Matter

Several mature methods and frameworks already support effective stress-testing. Here are key approaches shaping the field.

1. Adversarial Attacks

This is a classic technique for computer vision and NLP models. The idea is to feed modified inputs that look normal to humans but confuse the model.

This technique exposes how sensitive models can be to small, structured perturbations.
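To make the idea concrete, here is a minimal sketch of one well-known attack, the Fast Gradient Sign Method (FGSM), applied to a toy logistic classifier in plain Python. The weights, bias, input, and `epsilon` are hypothetical values chosen for illustration, not taken from any real system. Because the toy input has only three features, the step size is much larger than the "barely visible" perturbations that can suffice for high-dimensional inputs such as images.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(weights, bias, x):
    """Positive-class probability of a logistic (linear) classifier."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def sign(value):
    return (value > 0) - (value < 0)

def fgsm_perturb(weights, bias, x, y_true, epsilon):
    """One FGSM step: move every feature by epsilon in the direction
    that increases the cross-entropy loss.

    For logistic regression the loss gradient w.r.t. the input is (p - y) * w.
    """
    p = predict_proba(weights, bias, x)
    gradient = [(p - y_true) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, gradient)]

# Hypothetical toy model and input, chosen only for illustration.
WEIGHTS, BIAS = [2.0, -1.5, 0.5], 0.1
x_clean, label = [0.4, 0.2, 0.3], 1

x_adv = fgsm_perturb(WEIGHTS, BIAS, x_clean, label, epsilon=0.3)
print("clean:      ", predict_proba(WEIGHTS, BIAS, x_clean))
print("adversarial:", predict_proba(WEIGHTS, BIAS, x_adv))
```

In this toy setup the perturbed input crosses the 0.5 decision threshold even though no feature moved by more than `epsilon`. For a real network you would obtain the input gradient from your ML framework rather than from a closed-form expression.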

2. Simulating “Difficult” Users

Modern AI agents are highly sensitive to changes in user behavior. When interacting with polite users, they perform well. Under impatient or erratic communication styles, performance can drop by 30–46%.

Testers must simulate such difficult personalities:

- Skeptical users

- Impatient customers

- Users who constantly change requirements

- Users who type in all caps or mix languages

Emotional robustness becomes a measurable parameter.
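One lightweight way to start is to rewrite the same base request in several personas and check whether the agent still completes the task. The sketch below is an assumption-laden illustration: the persona functions, `toy_agent`, and the pass criterion are all hypothetical stand-ins, and simple string rewriting is far cruder than the trait-simulation methods used in research.

```python
import random

def impatient(msg):
    return f"{msg.rstrip('.?!')}?? I already asked this. Hurry up."

def skeptical(msg):
    return f"{msg} And I doubt you will get this right."

def all_caps(msg):
    return msg.upper()

def with_typos(msg, rate=0.1, seed=0):
    """Swap adjacent letters at roughly `rate` of positions (seeded, so reproducible)."""
    rng = random.Random(seed)
    chars = list(msg)
    i = 0
    while i < len(chars) - 1:
        if chars[i].isalpha() and chars[i + 1].isalpha() and rng.random() < rate:
            chars[i], chars[i + 1] = chars[i + 1], chars[i]
            i += 2  # skip the swapped pair
        else:
            i += 1
    return "".join(chars)

PERSONAS = {
    "polite": lambda msg: msg,
    "impatient": impatient,
    "skeptical": skeptical,
    "all_caps": all_caps,
    "typos": with_typos,
}

def run_persona_suite(agent, base_message, passes):
    """Return {persona_name: True/False} for one base request."""
    return {name: passes(agent(rewrite(base_message)))
            for name, rewrite in PERSONAS.items()}

def toy_agent(message):
    """Hypothetical stand-in for a real agent: a brittle, case-sensitive keyword match."""
    return "Refund started." if "refund" in message else "Sorry, I did not understand."

results = run_persona_suite(
    toy_agent,
    "I would like a refund for order 1234.",
    passes=lambda reply: "Refund" in reply,
)
print(results)
```

Replacing `toy_agent` with a call to the system under test, and `passes` with a task-specific check, turns this into a per-persona pass rate that can be tracked over time.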

3. Causal Data Stress Testing

The most severe failures often stem not from random noise but from structured distortions in data.

For example, if a demographic group is underrepresented in the training data, fairness and accuracy may collapse in production.

Testers must learn to simulate these structured shifts rather than random corruption.
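A simple structured shift is a change in subgroup proportions between the test set and production. The sketch below, using made-up records and hypothetical group names, measures accuracy per group and then estimates overall accuracy under a different group mix. It assumes per-group accuracy stays constant when the mix changes, which is a simplification.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """records: iterable of (group, y_true, y_pred) tuples."""
    hits, totals = defaultdict(int), defaultdict(int)
    for group, y_true, y_pred in records:
        totals[group] += 1
        hits[group] += int(y_true == y_pred)
    return {group: hits[group] / totals[group] for group in totals}

def accuracy_under_mix(records, group_mix):
    """Expected accuracy if groups appeared with the shares in `group_mix`
    (shares should sum to 1), holding per-group accuracy fixed."""
    per_group = accuracy_by_group(records)
    return sum(share * per_group[group] for group, share in group_mix.items())

# Illustrative data only: group "B" is underrepresented and served worse.
records = (
    [("A", 1, 1)] * 81 + [("A", 1, 0)] * 9 +
    [("B", 1, 1)] * 6 + [("B", 1, 0)] * 4
)

print(accuracy_by_group(records))
print("test-set mix:  ", accuracy_under_mix(records, {"A": 0.9, "B": 0.1}))
print("production mix:", accuracy_under_mix(records, {"A": 0.5, "B": 0.5}))
```

The headline accuracy looks healthy only because the weaker group is rare in the test set. Shifting the mix exposes the gap without adding any random corruption.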

4. Macroeconomic Scenario Stress Testing

In finance, Stress-Testing AI Models is already mainstream. Models are evaluated not only on historical data but on synthetic crisis scenarios:

- Sharp GDP contraction

- Market crashes

- Liquidity shortages

How would a default prediction model behave during a severe recession?

This mirrors regulatory stress testing used in banking systems.
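As a rough sketch of how such a scenario run might look, the code below applies additive shocks to the macro features of a toy default model and compares the predicted default probability across scenarios. The coefficients, feature names, shock sizes, and borrower values are all hypothetical and are not calibrated to any real portfolio or regulatory scenario.

```python
import math

# Hypothetical logistic default model: coefficients are illustrative only.
COEFFS = {"unemployment": 0.25, "gdp_growth": -0.30, "debt_to_income": 2.0}
INTERCEPT = -3.0

def default_probability(features):
    z = INTERCEPT + sum(COEFFS[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))

# Each scenario is a set of additive shocks, in percentage points.
SCENARIOS = {
    "baseline": {},
    "gdp_contraction": {"gdp_growth": -5.0},
    "severe_recession": {"gdp_growth": -6.0, "unemployment": 5.0},
}

def apply_scenario(features, shocks):
    """Return a shocked copy of the features; the input is left untouched."""
    return {name: value + shocks.get(name, 0.0) for name, value in features.items()}

borrower = {"unemployment": 4.0, "gdp_growth": 2.0, "debt_to_income": 0.35}

stressed = {
    name: default_probability(apply_scenario(borrower, shocks))
    for name, shocks in SCENARIOS.items()
}
for name, probability in stressed.items():
    print(f"{name:>17}: {probability:.2f}")
```

Beyond the point estimates, it is worth checking whether the model's outputs remain plausible at all once the shocked features fall outside the range seen in training.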

Building a Stress Matrix: A Structured Way to Break Your Own Model

One of the most effective additions to Stress-Testing AI Models is the creation of a Stress Matrix. Instead of running isolated experiments, you systematically combine stress factors and observe how the model behaves under compound pressure.

In real life, failures rarely happen because of one variable. They occur when multiple small stressors interact: noisy input + high load + impatient user + slight data drift. Individually, each factor may be harmless. Together, they trigger collapse.

A Stress Matrix helps teams simulate these combinations intentionally.

| Scenario ID | Input Quality | User Behavior | Traffic Load | Observed Effect | Risk Level |
| --- | --- | --- | --- | --- | --- |
| S1 | Clean input | Polite | Normal | Stable responses | Low |
| S2 | Minor typos | Polite | Normal | Slight latency increase | Low |
| S3 | Clean input | Impatient | Normal | Shorter, less empathetic replies | Medium |
| S4 | Minor typos | Impatient | High | Hallucinated answers increase | High |
| S5 | Noisy input | Aggressive | High | Task failure rate +32% | Critical |

Table 2. AI Stress Matrix for a Customer Support LLM

This type of matrix reveals non-linear behavior. Notice how S4 and S5 expose instability that does not appear in simpler tests.

The key insight: AI systems often degrade non-linearly. Stressors compound rather than simply add up.
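A matrix like this does not have to be written by hand. The following sketch enumerates every combination of factor levels with `itertools.product` and attaches the result of an evaluation function to each scenario. The factor names and levels mirror the table's columns but are otherwise assumptions, and `count_stressors` is only a placeholder for a real evaluation that would call the model and measure something like failure rate.

```python
from itertools import product

# The first level of each factor is treated as the baseline (assumed convention).
FACTORS = {
    "input_quality": ["clean", "minor_typos", "noisy"],
    "user_behavior": ["polite", "impatient", "aggressive"],
    "traffic_load": ["normal", "high"],
}

def build_stress_matrix(factors):
    """Every combination of factor levels, one dict per scenario."""
    names = list(factors)
    return [dict(zip(names, levels)) for levels in product(*factors.values())]

def run_matrix(scenarios, evaluate):
    """Attach evaluate(scenario) to each scenario under the key 'result'."""
    return [{**scenario, "result": evaluate(scenario)} for scenario in scenarios]

def count_stressors(scenario):
    """Placeholder evaluation: how many factors are away from their baseline level."""
    return sum(scenario[name] != levels[0] for name, levels in FACTORS.items())

matrix = build_stress_matrix(FACTORS)
report = run_matrix(matrix, count_stressors)

print(len(matrix), "scenarios")
for row in sorted(report, key=lambda r: r["result"], reverse=True)[:3]:
    print(row)
```

The full grid grows multiplicatively with each added factor, so in practice teams may sample from it or prioritize the combinations with the most active stressors.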

Case Study: How an “Impatient User” Reduces Accuracy by 30%

One of the most surprising areas of Stress-Testing AI Models is the simulation of human traits.

Most test cases assume ideal users: polite, consistent, clear. Reality is different.

The Tau-Bench study (2025) revealed that simply shifting user tone from polite to impatient reduced performance of leading AI agents by 30–46%. The researchers introduced TraitBasis, a method that dynamically alters a virtual user’s personality by adding skepticism, urgency, or confusion.

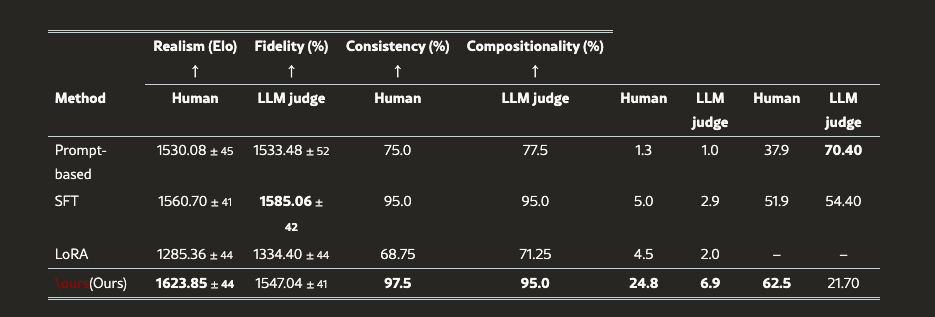

The study also compared human evaluations with automated LLM-as-a-judge scoring (using Claude). While human reviewers rated the proposed method higher in fidelity, consistency, and compositionality, the automated judge often rewarded keyword-heavy responses that lacked true coherence.

This gap highlights a key limitation of automated evaluation. In realistic stress conditions, human judgment remains the most reliable way to measure behavioral robustness.

For a clearer picture of these findings, see Table 3, where the results are presented across realism, fidelity, consistency, and compositionality, comparing human evaluations with LLM-as-a-judge assessments.

Table 3. Source: “Impatient Users Confuse AI Agents: High-fidelity Simulations of Human Traits for Testing Agents” (Tau-Bench, 2025)

Why This Matters for Testers

You must stop acting like a standard user. You must become the problematic one.

Your stress-test checklist should include:

- What happens if I interrupt the model mid-task?

- What if I change requirements three times in a row?

- Can a support bot maintain empathy under aggressive language?

Simulating the “human factor” under pressure is now a core competency.

New Skill Requirements for AI Testers

Transitioning into AI testing demands new capabilities.

1. Statistical Literacy

You must evaluate more than accuracy. Monitor:

- Precision

- Recall

- F1-score

- Calibration

- Error distribution shifts
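For reference, the first four metrics can be computed in a few lines of plain Python. This is a minimal sketch for binary labels (0 or 1). The calibration measure shown is one common variant of expected calibration error, binned on the predicted positive-class probability, and the sample labels are made up for illustration.

```python
def precision_recall_f1(y_true, y_pred):
    """Precision, recall, and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1

def expected_calibration_error(y_true, y_prob, n_bins=10):
    """Weighted average gap between mean predicted probability and the
    observed positive rate, over equal-width probability bins."""
    bins = [[] for _ in range(n_bins)]
    for t, p in zip(y_true, y_prob):
        index = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes in the last bin
        bins[index].append((t, p))
    total = len(y_true)
    ece = 0.0
    for members in bins:
        if members:
            mean_prob = sum(p for _, p in members) / len(members)
            positive_rate = sum(t for t, _ in members) / len(members)
            ece += len(members) / total * abs(mean_prob - positive_rate)
    return ece

# Illustrative labels and predictions only.
y_true = [1, 1, 1, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0]
print(precision_recall_f1(y_true, y_pred))
print(expected_calibration_error([0, 0], [0.9, 0.9]))
```

Comparing these numbers between the clean test set and each stressed variant is what turns them into a stress-test signal. A model whose accuracy holds but whose calibration error jumps is confidently wrong more often.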

2. Data Manipulation Skills

Many stress tests involve dataset modification:

- Injecting noise

- Creating missing values

- Rebalancing distributions

- Generating synthetic samples

You must learn to “break” data realistically.
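The first three operations in the list can be sketched with the standard library alone. The helper names, column names, and sample rows below are hypothetical. Each helper returns a modified copy and takes a seed where randomness is involved, so a failing stress test can be reproduced.

```python
import random

def inject_noise(rows, column, sigma, seed=0):
    """Add Gaussian noise with standard deviation `sigma` to one numeric column."""
    rng = random.Random(seed)
    return [{**row, column: row[column] + rng.gauss(0.0, sigma)} for row in rows]

def drop_values(rows, column, rate, seed=0):
    """Replace roughly `rate` of the values in one column with None."""
    rng = random.Random(seed)
    return [{**row, column: None if rng.random() < rate else row[column]}
            for row in rows]

def oversample(rows, column, value, factor):
    """Rebalance by repeating rows where row[column] == value `factor` times."""
    return [row for row in rows
            for _ in range(factor if row[column] == value else 1)]

# Illustrative rows only.
rows = [
    {"income": 42000.0, "segment": "retail"},
    {"income": 58000.0, "segment": "retail"},
    {"income": 31000.0, "segment": "student"},
]

noisy = inject_noise(rows, "income", sigma=1500.0, seed=7)
sparse = drop_values(rows, "income", rate=0.3, seed=7)
balanced = oversample(rows, "segment", "student", factor=2)
print(len(noisy), len(sparse), len(balanced))
```

For realistic breakage, the noise scale and missingness rate should come from what is actually observed in production logs rather than from arbitrary constants like the ones used here.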

3. Automation

Running thousands of perturbation experiments manually is impossible.

Python has become the de facto standard for scripting stress scenarios and automating experiments.
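A small harness illustrates the kind of automation involved: sweep a perturbation strength, repeat each setting over several seeds, and report the average accuracy as a degradation curve. Here `toy_model`, the sample data, and the noise levels are hypothetical stand-ins for a real model and dataset.

```python
import random
from statistics import mean

def toy_model(x):
    """Hypothetical stand-in for the model under test: a fixed threshold."""
    return int(x > 0.5)

def accuracy_at_noise(model, samples, sigma, seed):
    """Accuracy when Gaussian noise of scale `sigma` is added to every input."""
    rng = random.Random(seed)
    hits = sum(model(x + rng.gauss(0.0, sigma)) == y for x, y in samples)
    return hits / len(samples)

def degradation_curve(model, samples, sigmas, n_seeds=20):
    """Mean accuracy per noise level, averaged over `n_seeds` seeded runs."""
    return {
        sigma: mean(accuracy_at_noise(model, samples, sigma, seed)
                    for seed in range(n_seeds))
        for sigma in sigmas
    }

# Illustrative inputs in [0, 1], labeled by the same threshold the model uses.
samples = [(i / 100, int(i / 100 > 0.5)) for i in range(101)]

curve = degradation_curve(toy_model, samples, sigmas=[0.0, 0.05, 0.2, 0.5])
for sigma, accuracy in curve.items():
    print(f"sigma={sigma:<5} accuracy={accuracy:.3f}")
```

Averaging over seeds matters because a single perturbed run can look better or worse than the typical case. The shape of the curve, not one point on it, shows where the model starts to break.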

4. Model Awareness

You cannot effectively test a model without understanding its architecture.

Knowing the difference between CNNs and Transformers helps identify likely failure points.

Conclusion

The field is wide open. From simulating impatient customers to mathematically analyzing metric degradation, Stress-Testing AI Models requires testers to think like psychologists, hackers, and data analysts at once.

If you want to move beyond simply observing this evolution, consider exploring professional tools and services focused on AI resilience engineering. Organizations investing in systematic stress testing today are shaping demand for tomorrow’s specialists.

Learn red teaming. Study adversarial techniques. Understand not only code, but model behavior.

In the next five years, that ability will not just be valuable — it will be essential.
