Regression testing is a key practice for preventing changes from introducing negative side effects in production. Running a full regression suite can, however, take a long time and slow the delivery of new code. This article introduces change-to-test mapping for regression testing, an approach that aims to run only the tests that truly matter without compromising test coverage.

Author: Yulia Drogunova, https://www.linkedin.com/in/yulia-drogunova-409703113/

Introduction

Regression testing is essential to software quality, but in enterprise projects it often becomes a bottleneck. Full regression suites may run for hours, delaying feedback and slowing delivery. The problem is sharper in agile and DevOps, where teams must release updates daily.

This article introduces change-to-test mapping – a practical approach that runs only the tests that truly matter, without compromising coverage. By focusing on relevance instead of volume, organizations can accelerate delivery while maintaining confidence.

As test suites grow in size and complexity, covering not only functional checks but also integration, API, performance, and security, smarter selection strategies are becoming a necessity rather than an optimization.

Why Now?

The need for smarter regression strategies is more urgent than ever. Modern software systems are no longer monoliths; they are built from microservices, APIs, and distributed components, each evolving quickly. Every code change can ripple across modules, making full regressions increasingly impractical. At the same time, CI/CD costs are rising sharply. Cloud pipelines scale easily but generate massive bills when regression packs run repeatedly. For many organizations, testing has become one of the largest operational expenses in delivery.

The Regression Pressure

In many projects, even a minor code change may trigger hundreds or thousands of automated tests. This creates:

- Long-running CI/CD pipelines

- Delayed feedback for developers

- Bottlenecks in delivery workflows

In industries such as banking, e-commerce, and telecom, regression packs can easily grow into tens of thousands of tests – turning every release into a costly and time-consuming process. Beyond cost and time, excessive regressions also affect team behavior: developers may avoid running tests locally, hotfixes can be delayed due to long validation cycles, and product managers may hesitate to roll out incremental updates. What should be a safeguard often becomes a bottleneck, limiting innovation and slowing time-to-market.

Change-to-Test Mapping: A Pragmatic Approach

The core idea is simple: “If only part of the code changes, why not run only the tests covering that part?”

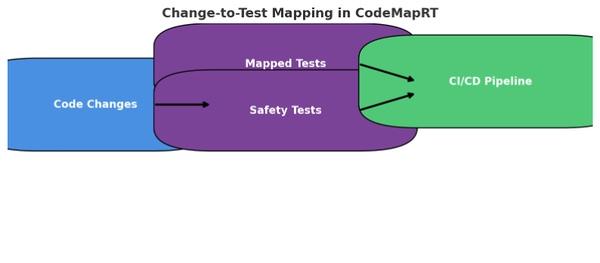

Change-to-test mapping links modified code to the relevant tests. Instead of running the entire suite on every commit, the approach executes a targeted subset – while retaining safeguards such as safety tests and fallback runs.

What makes this approach pragmatic is that it does not rely on building a “perfect” model of the system. Instead, it uses lightweight signals – such as file changes, annotations, or coverage data – to approximate the most relevant set of tests. Combined with guardrails, this creates a balance: fast enough to keep up with modern delivery, yet safe enough to trust in production-grade environments.

How It Works in Practice

A. Foundation (set up once, keep up-to-date)

Before selective execution, maintain a mapping between code modules and tests. In CodeMapRT this is done via:

- Annotations/metadata in tests (e.g., @CoversModule("auth"))

- Configuration files that define module→test relationships

- Coverage data showing exercised files

Treat the mapping as core infrastructure: versioned, reviewed, and updated as the system evolves.
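To make the foundation step more concrete, here is a minimal Python sketch of an annotation-style mapping merged with configuration-style links. It is an illustration only, not CodeMapRT's actual API: the `covers_module` decorator, the `MODULE_TO_TESTS` registry, the `merge_config` helper, and the test names are all hypothetical.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Set

# Hypothetical registry: module name -> names of the tests that cover it.
MODULE_TO_TESTS: Dict[str, Set[str]] = defaultdict(set)


def covers_module(*modules: str) -> Callable:
    """Illustrative decorator that records which modules a test covers."""
    def decorator(test_func: Callable) -> Callable:
        for module in modules:
            MODULE_TO_TESTS[module].add(test_func.__name__)
        return test_func  # the test itself is left unchanged
    return decorator


@covers_module("auth")
def test_login_with_valid_password():
    assert True  # placeholder body


@covers_module("auth", "payments")
def test_checkout_requires_login():
    assert True  # placeholder body


def merge_config(
    mapping: Dict[str, Set[str]], config: Dict[str, List[str]]
) -> Dict[str, Set[str]]:
    """Combine annotation-derived links with links declared in a config file."""
    merged = {module: set(tests) for module, tests in mapping.items()}
    for module, tests in config.items():
        merged.setdefault(module, set()).update(tests)
    return merged
```

Keeping the merged result in version control, next to the code it describes, is one way to make the mapping reviewable like any other change.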

B. Runtime Flow (per commit/PR)

When a commit or pull request is submitted, CodeMapRT performs the following steps:

- Detect which files or modules were modified (e.g., via Git diffs).

- Select the tests mapped to those modules (via annotations, coverage data, or configuration).

- Add safety tests – defined in a dedicated list (e.g., safety-tests.txt) and always included on top of mapped tests. These cover critical business flows such as authentication, payments, and integrations, ensuring essential paths are validated even when only a subset of the suite runs.

- Run the filtered suite in CI/CD.

- Use fallback full regression for major milestones.

This hybrid model balances efficiency with reliability.

Example of mapping code changes to mapped and safety tests in CodeMapRT before execution in CI/CD.
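The runtime flow can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions rather than CodeMapRT's implementation: the path prefixes, module names, test names, and the `select_tests` function are hypothetical. Treating an unmapped file as a trigger for the full suite is also an extra guardrail assumed here, not a rule taken from the article.

```python
from typing import Dict, Iterable, List, Set, Tuple

# Hypothetical mapping data; a real project would load it from annotations,
# coverage reports, or a configuration file.
PATH_PREFIX_TO_MODULE: Dict[str, str] = {
    "src/auth/": "auth",
    "src/payments/": "payments",
    "src/reports/": "reports",
}
MODULE_TO_TESTS: Dict[str, Set[str]] = {
    "auth": {"test_login", "test_logout"},
    "payments": {"test_checkout"},
    "reports": {"test_export"},
}
# Stand-in for the contents of a list such as safety-tests.txt.
SAFETY_TESTS: List[str] = ["test_login", "test_payment_smoke"]
FULL_SUITE: List[str] = sorted(set().union(*MODULE_TO_TESTS.values(), SAFETY_TESTS))


def changed_modules(changed_files: Iterable[str]) -> Tuple[Set[str], List[str]]:
    """Map changed paths to modules; also return the paths that match nothing."""
    modules: Set[str] = set()
    unmapped: List[str] = []
    for path in changed_files:
        for prefix, module in PATH_PREFIX_TO_MODULE.items():
            if path.startswith(prefix):
                modules.add(module)
                break
        else:
            unmapped.append(path)
    return modules, unmapped


def select_tests(
    changed_files: Iterable[str], full_suite: List[str], force_full: bool = False
) -> List[str]:
    """Return mapped tests plus safety tests, or the full suite as a fallback."""
    modules, unmapped = changed_modules(changed_files)
    if force_full or unmapped:  # milestone run, or a change the mapping cannot explain
        return sorted(full_suite)
    selected = set(SAFETY_TESTS)
    for module in modules:
        selected.update(MODULE_TO_TESTS.get(module, ()))
    return sorted(selected)
```

In a pipeline, the list of changed files could come from a command such as `git diff --name-only` run against the target branch, and the returned names could be passed to the test runner.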

Several techniques can further strengthen the approach:

- Static analysis tools (e.g., SonarQube, Semgrep) could be used to identify indirect dependencies that may not be obvious from Git diffs alone.

- Dynamic coverage tracking during test execution might continuously update the mapping, ensuring that newly added or refactored tests are automatically linked to relevant modules.

- Risk-based prioritization can potentially be layered on top of mapping, so that tests connected to high-criticality modules (e.g., payments, authentication, healthcare data) are always executed first, regardless of change size.

- Parallel execution in CI/CD would allow even a filtered suite to complete in minutes, by scaling across distributed runners.

- Integration with modern orchestration platforms (GitHub Actions, GitLab CI, Jenkins pipelines) could help embed change-to-test mapping seamlessly into existing workflows.

- An incremental adoption strategy may be a practical path forward: starting with less critical modules and gradually extending to mission-critical areas as confidence grows.

Such improvements demonstrate how change-to-test mapping can grow into a comprehensive approach that balances efficiency with confidence in software quality.
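As one possible reading of the risk-based prioritization idea, the sketch below orders an already selected set of tests so that those tied to high-criticality modules run first. The risk scores, the test-to-module lookup, and the `prioritize` function are hypothetical and chosen only for illustration.

```python
from typing import Dict, Iterable, List

# Hypothetical criticality scores: a higher number means a riskier module.
MODULE_RISK: Dict[str, int] = {"payments": 3, "auth": 3, "reports": 1}

# Hypothetical lookup from test name to the module it primarily covers.
TEST_TO_MODULE: Dict[str, str] = {
    "test_checkout": "payments",
    "test_login": "auth",
    "test_export": "reports",
}


def prioritize(
    tests: Iterable[str],
    test_to_module: Dict[str, str],
    module_risk: Dict[str, int],
) -> List[str]:
    """Order tests by descending module risk, then by name for stable output."""
    def sort_key(test: str):
        # Tests with no known module or score get risk 0 and therefore run last.
        risk = module_risk.get(test_to_module.get(test, ""), 0)
        return (-risk, test)

    return sorted(tests, key=sort_key)
```

Ordering alone does not add tests to the run. A stricter policy that always includes high-risk tests would resemble the safety list described earlier.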

Real-world Illustration

To validate the approach, a demo project with 50 automated tests was created.

- Full regression: 50 tests executed on every commit

- Selective regression with mapping: 28 mapped tests + 5 safety tests = 33 total

- Outcome: a 34% reduction in executions, even in a small-scale demo

Even in a modest setup, this shows measurable savings. In enterprise environments with thousands of tests, where only a fraction of the codebase changes per commit, change-to-test mapping can cut 70-90% of unnecessary executions – saving hours of pipeline time and reducing CI/CD costs significantly.
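The demo figure can be checked with a short calculation. The helper name `reduction_percent` is hypothetical, and the sketch assumes, as the totals above imply, that the 5 safety tests do not overlap with the 28 mapped tests.

```python
def reduction_percent(full_count: int, selected_count: int) -> float:
    """Percentage of test executions avoided compared with a full run."""
    if full_count <= 0:
        raise ValueError("full_count must be positive")
    return 100 * (full_count - selected_count) / full_count


mapped, safety, full = 28, 5, 50
selected = mapped + safety  # 33 tests, assuming no overlap between the two groups
print(f"{selected} of {full} tests run, {reduction_percent(full, selected):.0f}% fewer executions")
# prints "33 of 50 tests run, 34% fewer executions"
```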

Benefits in Practice

- Accelerates feedback loops → results arrive in minutes, not hours, enabling developers to validate changes during the same working session rather than waiting for overnight builds.

- Cuts infrastructure waste → leaner pipelines and smaller CI/CD bills, as only relevant subsets of tests are executed. This is especially valuable in cloud-based environments where every extra minute of compute translates into direct costs.

- Supports frequent releases → regression no longer blocks daily delivery, aligning testing with modern Agile and DevOps practices that demand multiple deployments per day.

- Maintains confidence → safety tests and fallback runs prevent missed issues, ensuring that coverage is not sacrificed for speed. Teams retain the ability to run full suites when business risk is high.

- Scales to enterprise systems → adaptable to large, complex codebases with thousands of services and dependencies, making it suitable for industries like banking, telecom, and healthcare.

- Improves developer productivity → less waiting on pipelines means more time spent building features, fixing issues earlier, and reducing context-switching.

- Supports sustainability goals → reducing redundant test executions also lowers energy consumption in large CI/CD clusters, contributing to greener IT operations.

- Delivers measurable savings → for example, in an organization with 10,000 automated regression tests, reducing execution volume by just 50% can save hundreds of hours of machine time each month, translating into both cost savings and faster release cycles.
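A back-of-the-envelope estimate shows how the last point could add up. The average test duration (30 seconds) and the number of regression runs per month (20) are assumptions made purely for illustration, and the function name `machine_hours_saved` is hypothetical. Real figures will vary widely between organizations.

```python
def machine_hours_saved(
    test_count: int,
    reduction: float,
    avg_test_seconds: float,
    runs_per_month: int,
) -> float:
    """Estimate monthly machine hours avoided by skipping a share of tests."""
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be between 0 and 1")
    skipped_per_run = test_count * reduction
    return skipped_per_run * avg_test_seconds * runs_per_month / 3600


# Assumed inputs: 10,000 tests, 50% fewer executions, 30 s per test, 20 runs a month.
estimate = machine_hours_saved(10_000, 0.5, 30, 20)
print(f"about {estimate:.0f} machine hours saved per month")
```

Under these assumed inputs the estimate comes to roughly 830 machine hours a month, which is consistent with the "hundreds of hours" order of magnitude.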

Best Practices & Considerations

- Start small, scale gradually → begin with one module or service to validate the approach before extending it across the entire system.

- Define clear ownership → ensure that teams know who maintains the mapping metadata (e.g., QA, developers, or a shared DevOps function). Without ownership, mappings risk becoming outdated.

- Automate mapping updates → integrate coverage tools or static analysis to keep test-to-code links current, reducing the need for manual maintenance.

- Monitor accuracy continuously → track false negatives (missed tests) and false positives (unnecessary executions) to refine the strategy and increase trust.

- Balance speed with safety → maintain policies for when to trigger full regressions, such as before major releases or after high-risk architectural changes.

- Leverage analytics → gather metrics on test execution time, skipped tests, and defect leakage to measure ROI and demonstrate business value.

- Prepare cultural alignment → educate developers and QA teams on how selective regression works so they trust the results and don’t default to full runs “just in case.”

- Plan for integration with other practices → combine change-to-test mapping with techniques like test impact analysis, flaky test detection, and parallel execution to maximize efficiency.
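To monitor accuracy, one option is to compare the selected tests against a reference set taken from a periodic full run. The sketch below is a proxy under stated assumptions: "relevant" here means the tests that a full run showed to exercise the changed code, and the function name `selection_accuracy` is hypothetical.

```python
from typing import Dict, FrozenSet, List, Set, Union


def selection_accuracy(
    selected: Set[str],
    relevant: Set[str],
    safety: Union[Set[str], FrozenSet[str]] = frozenset(),
) -> Dict[str, Union[List[str], float]]:
    """Compare a selective run against a reference set from a full run."""
    missed = relevant - selected  # false negatives: should have run, did not
    # False positives: ran without being relevant. Safety tests are excluded
    # because they run by design.
    unnecessary = selected - relevant - safety
    recall = len(relevant & selected) / len(relevant) if relevant else 1.0
    return {
        "missed": sorted(missed),
        "unnecessary": sorted(unnecessary),
        "recall": recall,
    }
```

Tracking these values over time gives a simple signal of whether the mapping is drifting. A growing "missed" list suggests the mapping needs updating before the selective runs can be trusted.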

Conclusion

Regression testing does not have to be an unavoidable slowdown. By mapping code changes to relevant tests, teams can accelerate delivery while preserving confidence in quality. As test suites continue to grow, approaches like change-to-test mapping will be key to balancing speed, cost, and quality in software delivery.

QA teams can start experimenting with change-to-test mapping in smaller projects and gradually expand adoption to larger pipelines. Even partial implementation – such as introducing lightweight annotations or selective test triggers – can already provide measurable savings and shorten feedback loops. Over time, organizations can evolve these practices into a robust strategy that scales to thousands of tests across enterprise systems.

The long-term potential is significant: reduced operational costs, improved developer productivity, and a sustainable testing process that keeps pace with agile and DevOps delivery models. As testing complexity continues to rise, selective and intelligent approaches will not only be beneficial but essential for competitive advantage.

As an open-source implementation of this idea, I created CodeMapRT, which demonstrates how change-to-test mapping can be applied in real CI/CD environments. It serves as both a proof of concept and a practical tool that teams can adapt, extend, and integrate into their own pipelines – helping to reshape regression testing into a driver of speed rather than a source of delay.

GitHub Repository: https://github.com/yuliadrogunova/codemaprt

About the Author

Yulia Drogunova is a Senior QA Engineer with extensive experience in FinTech and enterprise systems. She created CodeMapRT, a framework for change-to-test mapping that streamlines regression testing. Yulia publishes articles, contributes to QA innovation, and engages in professional communities to promote modern approaches in software testing, automation, and quality assurance.