Selenium is a widely used open source tool for software testing that provides a record/playback IDE for authoring tests without learning a specific test scripting language. In this article, Brian Van Stone provides some best practices on how to successfully use Selenium for your test automation efforts.

Author: Brian Van Stone, QualiTest Group, http://www.qualitestgroup.com/

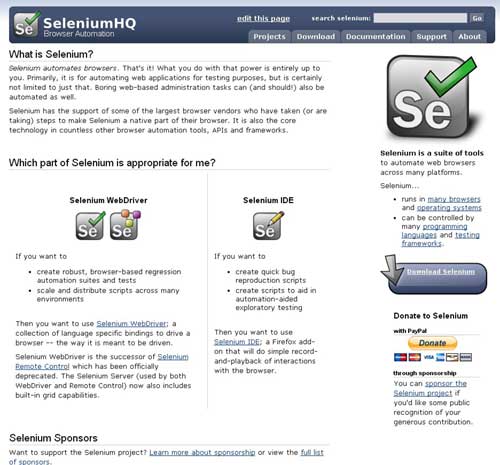

Selenium is currently one of the hottest open source tools on the market for test automation. With an active push to move applications to thin clients, Selenium is becoming increasingly capable of addressing the test automation needs of an entire organization. However, many organizations find themselves unsure where to focus when trying to establish a Selenium practice. While there is no secret recipe for a Selenium implementation, there are several best practices to consider in any attempt to successfully leverage Selenium.

Consider Organizational Standards

When implementing a new tool or practice within an organization, you need to evaluate whether the tool is right for the job, the business, and the teams that will be using it. Selenium operates only on web-based applications. A common mistake is for a company to adopt Selenium for its automation practice, only to discover that it does not provide the coverage that is needed. Addressing this issue late in the implementation can lead to gaps in test coverage or the acquisition of additional tools, either of which can cause headaches, inefficiencies, or missed bugs.

After the feasibility of Selenium for your applications is resolved, you must evaluate how well Selenium fits into your testing strategy as a technology. While Selenium offers language bindings for a large selection of popular programming languages, a staff that is only familiar with VBScript or another language that Selenium does not support will have difficulty transitioning smoothly. Beyond that, deciding to support a technology stack that isn’t currently an organizational standard involves a lot more than just learning a new language. For example, setting Java as a standard will entail installing new software such as IDEs on developer machines, managing installation and updates of the Java runtime environment on client machines, determining a build and release platform, and revisiting source control management solutions, among a potential host of other considerations.

Construct a Framework

One of the simplest, and yet most naïve, ways to use Selenium is to author a script for every test. While this may seem to be the most obvious way to achieve full test coverage, this approach will lead to future disasters. To strive towards tests that are more stable, faster to develop, more consistent, more readable, and more change tolerant, it is imperative that a framework either be selected or developed. While this can lead to more time initially spent on development, the future payoff is enormous.
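As one illustration of what a thin framework layer can look like, here is a minimal page-object sketch in Python. It is not taken from any particular framework: the `LoginPage` class, its locators, and `valid_login_scenario` are hypothetical names, and the `driver` argument is assumed to be any object exposing `find_element(by, value)`, as a Selenium WebDriver does.

```python
"""Page-object sketch: tests call intent-level methods, not raw element lookups."""


class LoginPage:
    # Hypothetical locators as (strategy, value) pairs. The strings "id" and
    # "css selector" are the values behind Selenium's By.ID and By.CSS_SELECTOR.
    USERNAME = ("id", "username")
    PASSWORD = ("id", "password")
    SUBMIT = ("css selector", "button[type='submit']")

    def __init__(self, driver):
        # Assumed: any object exposing find_element(by, value).
        self.driver = driver

    def log_in(self, username, password):
        """Fill in the login form and submit it."""
        self.driver.find_element(*self.USERNAME).send_keys(username)
        self.driver.find_element(*self.PASSWORD).send_keys(password)
        self.driver.find_element(*self.SUBMIT).click()


def valid_login_scenario(driver):
    """A test body reads as a sequence of business actions."""
    LoginPage(driver).log_in("demo_user", "demo_password")
```

If the login form changes, only `LoginPage` needs to be touched; every test that logs in keeps working unchanged.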

Create Code Standards

An aspect of any test automation initiative that is too often overlooked is the need to maintain standards for code quality. Testers can host code reviews, and developers can and should be involved in test automation. Adhering to existing naming and documentation conventions is important, and source control is an absolute must. Equally important, however, is the need to produce reusable code. Test automation needs to be developed in the same way that a developer would develop code: take a piece of logic, abstract it to a general use case, and prepare it for mass production. Consider educating test automation developers in foundational software development techniques, just as you would with other development personnel.

Externalize Configuration

In almost every automation initiative, someone has been displeased with the expensive process of maintaining automation scripts. A common disaster story is that a new build is released and the automation stops working. To an extent this is the nature of the beast, but it does not make the situation hopeless. Measures can be put in place to reduce the time wasted on maintenance. Test automation should be consistent, adaptable, and configurable. This has several implications for automation development:

- Attempt to make automation self-aware. Anticipate dynamic application functionality and try to accommodate it whenever possible. Sometimes this means handling several concrete scenarios, and other times it means using intelligent object recognition to handle a wider variety of potential changes. Using Firebug, the Chrome developer console, or another browser plugin to generate XPath expressions is almost never a suitable solution, because generated expressions tend to be tied to the exact page structure and break when it changes.

- Expose configurable values whenever possible (but only when it makes sense to do so). While this can, and often does, include parameters to small units of automation logic, externalizing object identification is critical. Unlike other market-leading automation tools, Selenium offers no manageable object identification strategy at all. The vast majority of maintenance issues stem from application changes that are fixed simply by adjusting object identification.

- Apply those naming conventions and other standards that were decided on before development initiation, especially to any externalized configuration. Do not let this slip by in code review. While it may seem trivial, a failure to adhere to the agreed-upon standards can turn what should be a few minutes of maintenance into hours of headaches and debugging.

- Always remember that hard coding is easy coding, and that the ease is paid for later. Placing important, potentially configurable values directly in the source code means that future maintenance will require code changes.
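The points above about externalizing object identification can be sketched as a small locator repository. This is only one possible approach, under stated assumptions: the JSON layout, the logical names such as `login.submit`, and the `LocatorRepository` class are all hypothetical. The strategy strings are the ones Selenium's `find_element` accepts (the values behind `By.ID`, `By.CSS_SELECTOR`, and so on).

```python
import json

# Hypothetical locator configuration. In practice this would live in its own
# file under source control rather than inline in the code.
LOCATORS_JSON = """
{
  "login.username": {"by": "id", "value": "username"},
  "login.submit": {"by": "css selector", "value": "button[type='submit']"}
}
"""

# Strategy strings accepted by Selenium's find_element.
SUPPORTED_STRATEGIES = {
    "id", "name", "xpath", "css selector", "class name",
    "tag name", "link text", "partial link text",
}


class LocatorRepository:
    """Maps logical element names to (strategy, value) pairs."""

    def __init__(self, raw_json):
        self._entries = json.loads(raw_json)

    def get(self, name):
        try:
            entry = self._entries[name]
        except KeyError:
            raise KeyError(f"No locator configured for '{name}'") from None
        if entry["by"] not in SUPPORTED_STRATEGIES:
            raise ValueError(f"Unsupported strategy '{entry['by']}' for '{name}'")
        return entry["by"], entry["value"]


locators = LocatorRepository(LOCATORS_JSON)
print(locators.get("login.submit"))
```

With a real driver, a call such as `driver.find_element(*locators.get("login.submit"))` would then pick up any locator change from the configuration alone, without touching test code.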

Implement Logging and Reporting

The lack of built-in logging and reporting is often regarded as Selenium's largest disadvantage. Test automation is nearly useless without test results. Through logs and reports, we can determine the pass or failure status of tests, assess test coverage, analyze failures, debug automation scripts, collect debug information to file with a bug report, make screen captures accessible, and so much more.

When implementing features to provide this coverage, it is best to separate the ideas of logging and reporting. Logging is best reserved for the technical details of test automation and written to a flat text file. This is where you should find stack traces for errors, details of actions being performed against the application under test, general I/O activity, and database interactions. The log should essentially read as a “geeky script” of the test automation execution.
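A technical log of this kind can be produced with very little code. The following is a minimal sketch using Python's standard `logging` module; the logger name `automation.run` and the helper names `build_run_logger` and `logged_step` are assumptions made for illustration.

```python
import logging


def build_run_logger(log_path):
    """Return a logger that writes timestamped entries to a plain-text file."""
    logger = logging.getLogger("automation.run")  # hypothetical logger name
    logger.setLevel(logging.DEBUG)
    # Remove and close old handlers so repeated calls do not duplicate lines.
    for old in list(logger.handlers):
        logger.removeHandler(old)
        old.close()
    handler = logging.FileHandler(log_path, encoding="utf-8")
    handler.setFormatter(
        logging.Formatter("%(asctime)s %(levelname)-8s %(message)s")
    )
    logger.addHandler(handler)
    return logger


def logged_step(logger, description, action):
    """Run one automation step, logging the action and any stack trace."""
    logger.info("START %s", description)
    try:
        result = action()
    except Exception:
        # logger.exception records the full stack trace at ERROR level.
        logger.exception("FAILED %s", description)
        raise
    logger.info("DONE %s", description)
    return result
```

Wrapping each action, for example `logged_step(log, "click submit", lambda: button.click())`, yields a step-by-step transcript of the run, with stack traces attached to the step that failed.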

Test reports are best served as HTML or some other portable and readable format. This is where we can apply color coding to results, generate graphs, or provide links to other relevant files like screenshots, logs, and test data. Reports should be concerned with high-level execution details. What test cases were run? In what environment were they run? How can I measure my passes and failures?
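To make the distinction concrete, here is a minimal sketch of a color-coded HTML report built with the Python standard library alone. The `CaseResult` structure, the `render_report` function, the colors, and the sample test names and paths are all hypothetical; a real report would likely add graphs and screenshot links.

```python
import html
from dataclasses import dataclass


@dataclass
class CaseResult:
    name: str
    passed: bool
    log_file: str  # relative path to the technical log for this test


def render_report(environment, results):
    """Build a small color-coded HTML summary of one test run."""
    passed = sum(1 for r in results if r.passed)
    rows = []
    for r in results:
        status, color = ("PASS", "#c8e6c9") if r.passed else ("FAIL", "#ffcdd2")
        rows.append(
            f'<tr style="background:{color}">'
            f"<td>{html.escape(r.name)}</td><td>{status}</td>"
            f'<td><a href="{html.escape(r.log_file)}">log</a></td></tr>'
        )
    return (
        "<html><body>"
        f"<h1>Test Report</h1><p>Environment: {html.escape(environment)}</p>"
        f"<p>{passed} passed, {len(results) - passed} failed</p>"
        "<table><tr><th>Test</th><th>Status</th><th>Details</th></tr>"
        + "".join(rows)
        + "</table></body></html>"
    )


# Hypothetical sample data for illustration.
sample_results = [
    CaseResult("login_valid_user", True, "logs/login_valid_user.log"),
    CaseResult("login_locked_user", False, "logs/login_locked_user.log"),
]
report_html = render_report("staging", sample_results)
```

The report answers the high-level questions (what ran, where, and how many passed) and links out to the detailed log for anyone who needs to dig into a failure.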

Remember, the first thing the client will see after executing your automation is the test report. Make sure it serves its purpose well enough to also be the last.

About the Author

Brian Van Stone has been working in the software testing industry for five years in various roles as a test automation developer and automation framework architect. He has experience with a variety of automation tools such as Selenium, Appium, SoapUI, eggPlant, QTP and TestComplete.