Code coverage is a metric that measures the degree to which the source code of a program is executed by a particular test suite. It is reported by open source and commercial code coverage tools and displayed in quality dashboards like SonarQube. There are many discussions about the right level of code coverage. In his book Quality Code, Stephen Vance explains the limits of this metric.

Additionally, code coverage can deceive. Coverage only shows you the code that you executed, not the code you verified. The usefulness of coverage is only as good as the tests that drive it. Even well-intentioned developers can become complacent in the face of a coverage report. Here are some anecdotes of innocent mistakes from teams I have led in the past that let you begin to imagine the abuse that can be intentionally wrought.

- A developer wrote the setup and execution phases of a test, then got distracted before going home for the weekend. Losing his context, he ran his build Monday morning and committed the code after verifying that he had achieved full coverage. Later inspection of the code revealed that he had committed tests that fully exercised the code under test but contained no assertions. The test achieved code coverage and passed, but the code contained bugs.

- A developer wrote a web controller that acted as the switchyard between pages in the application. Not knowing the destination page for a particular condition, this developer used the empty string as a placeholder and wrote a test that verified that the placeholder was returned as expected, giving passing tests with full coverage. Two months later, a user reported that the application returned to the home page under a certain obscure combination of conditions. Root cause analysis revealed that the empty string placeholder had never been replaced once the right page was defined. The empty string was concatenated to the domain and the context path for the application, redirecting to the home page.

- A developer who had recently discovered and fallen under the spell of mocks wrote a test. The developer inadvertently mocked the code under test, thus executing a passing test. Incidental use of the code under test from other tests resulted in some coverage of the code in question. This particular system did not have full coverage. Later inspection of tests while trying to meaningfully increase the coverage discovered the gaffe, and a test was written that executed the code under test instead of only the mock.

Code coverage is a guide, not a goal. Coverage helps you write the right tests to exercise the syntactic execution paths of your code. Your brain still needs to be engaged. Similarly, the quality of the tests you write depends on the skill and attention you apply to the task of writing them. Coverage has little power to detect accidentally or deliberately shoddy tests.

Source: “Quality Code: Software Testing Principles, Practices, and Patterns”, Stephen Vance, Addison-Wesley

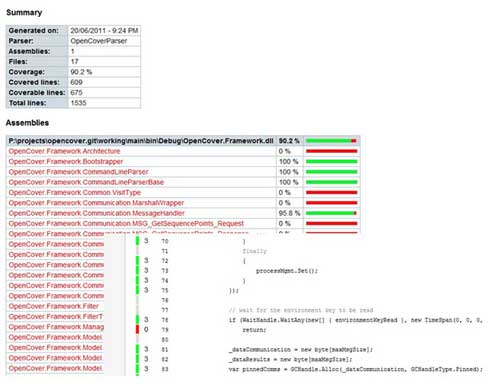

image source: https://github.com/OpenCover/opencover/wiki/Reports

Reaching 100% code coverage is difficult and might even be a counterproductive goal. What a project needs is meaningful tests, not just tests that execute every line of code without trying to detect issues. We should keep in mind, however, that code coverage is a useful indicator of the testing activity around the code, especially at the unit testing level. While a high level of code coverage is no guarantee of product quality, a low level of code coverage is a clear indication of insufficient testing.

Further reading

* Minimum Acceptable Code Coverage

* Perform Code Coverage Analysis with .NET to Ensure Thorough Application Testing